Add model card metadata

This PR adds metadata to the model card: the pipeline tag (`image-text-to-text`), the library name (`transformers`), and the license (assumed to be `apache-2.0`; please verify). This improves discoverability and gives users essential information.
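To help with verifying the metadata, here is a minimal sketch of how the front matter block at the top of a README could be read back. The helper name `read_front_matter` is hypothetical, and the parser only handles flat `key: value` pairs like the ones in this PR, not general YAML.

```python
def read_front_matter(readme_text: str) -> dict[str, str]:
    """Return the flat key/value pairs between the leading '---' fences."""
    lines = readme_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no front matter at the top of the file
    metadata: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return metadata  # closing fence reached
        key, sep, value = line.partition(":")
        if sep:
            metadata[key.strip()] = value.strip()
    return {}  # no closing fence: treat the block as absent


SAMPLE = """---
license: apache-2.0
library_name: transformers
pipeline_tag: image-text-to-text
---

# LongWriter-V
"""

card_metadata = read_front_matter(SAMPLE)
```

For anything beyond a quick check, a real YAML parser would be the safer choice.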

README.md

ADDED

@@ -0,0 +1,77 @@

---
license: apache-2.0
library_name: transformers
pipeline_tag: image-text-to-text
---

# LongWriter-V: Enabling Ultra-Long and High-Fidelity Generation in Vision-Language Models

<p align="center">
🤗 <a href="https://huggingface.co/datasets/THU-KEG/LongWriter-V-22K" target="_blank">Train Dataset</a> • 🤗 <a href="https://huggingface.co/datasets/THU-KEG/MMLongBench-Write" target="_blank">Benchmark</a> • 🤗 <a href="https://huggingface.co/THU-KEG/LongWriter-V-7B-DPO" target="_blank">Model</a> • 📃 <a href="https://arxiv.org/abs/2502.14834" target="_blank">Paper</a>
</p>

## 🔍 Table of Contents
- [⚙️ LongWriter-V Deployment](#deployment)
- [🤖️ LongWriter-Agent-V](#agentwrite)
- [🖥️ Model Training](#longwriter-v-training)
- [📊 Evaluation](#evaluation)
- [👀 Cases](#case)
- [📝 Citation](#citation)

<a name="deployment"></a>
## ⚙️ LongWriter-V Deployment

**Environment Setup**:
To run inference with Qwen2.5-VL-based models, you may need to install transformers from source. See this [issue](https://github.com/QwenLM/Qwen2.5-VL/issues/706) for details.

We open-source three models: [LongWriter-V-7B](https://huggingface.co/THU-KEG/LongWriter-V-7B) and [LongWriter-V-7B-DPO](https://huggingface.co/THU-KEG/LongWriter-V-7B-DPO), both trained from [Qwen2.5-VL-7B-Instruct](https://huggingface.co/Qwen/Qwen2.5-VL-7B-Instruct), and [LongWriter-V-72B](https://huggingface.co/THU-KEG/LongWriter-V-72B), trained from [Qwen2.5-VL-72B-Instruct](https://huggingface.co/Qwen/Qwen2.5-VL-72B-Instruct).
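As a rough sketch of what inference might look like, the snippet below follows the usual Qwen2.5-VL usage pattern in `transformers`. It assumes that the LongWriter-V checkpoints load with the stock `Qwen2_5_VLForConditionalGeneration` class, that the `qwen-vl-utils` and `accelerate` packages are installed, and that `max_new_tokens=8192` is a reasonable budget; none of this is confirmed by the model card. The helper names `build_messages` and `generate` are illustrative only.

```python
MODEL_ID = "THU-KEG/LongWriter-V-7B-DPO"  # assumption: any of the three checkpoints works the same way


def build_messages(image_path: str, prompt: str) -> list[dict]:
    """Build a single-turn chat message in the Qwen2.5-VL message format."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image_path},
                {"type": "text", "text": prompt},
            ],
        }
    ]


def generate(image_path: str, prompt: str, max_new_tokens: int = 8192) -> str:
    """Load the model and generate a response for one image and one prompt."""
    # Heavy imports stay local so that importing this module remains cheap.
    from qwen_vl_utils import process_vision_info
    from transformers import AutoProcessor, Qwen2_5_VLForConditionalGeneration

    model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    processor = AutoProcessor.from_pretrained(MODEL_ID)

    messages = build_messages(image_path, prompt)
    text = processor.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    image_inputs, video_inputs = process_vision_info(messages)
    inputs = processor(
        text=[text],
        images=image_inputs,
        videos=video_inputs,
        padding=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so that only the newly generated text is decoded.
    new_tokens = output_ids[:, inputs.input_ids.shape[1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0]
```

Calling `generate("photo.jpg", "Write a 3000-word article based on this image.")` would then return the generated text; the file name and prompt here are placeholders.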

<a name="agentwrite"></a>
## 🤖️ LongWriter-Agent-V

We are also open-sourcing LongWriter-Agent-V, our automated pipeline for constructing ultra-long output data, under `agentwrite/`. Configure your API key in `config.py`, then run `outline_vlm.py` to obtain the final data.

<a name="longwriter-v-training"></a>
## 🖥️ Model Training

You can download the **LongWriter-V-22K** data from Hugging Face Datasets ([🤗 HF Repo](https://huggingface.co/datasets/THU-KEG/LongWriter-V-22K)).
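One possible way to fetch the dataset files is `snapshot_download` from `huggingface_hub`, sketched below. The helper name `download_dataset` and the default `local_dir` are assumptions for illustration; the repository itself may expect a different layout.

```python
DATASET_ID = "THU-KEG/LongWriter-V-22K"


def download_dataset(local_dir: str = "data/LongWriter-V-22K") -> str:
    """Download every file of the dataset repo and return the local folder path."""
    # Imported lazily so the module can be imported without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=DATASET_ID,
        repo_type="dataset",  # the repo lives under huggingface.co/datasets/
        local_dir=local_dir,
    )
```

Calling `download_dataset()` requires network access and enough disk space for the full dataset.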

You can train the model with [LLaMA-Factory](https://github.com/hiyouga/LLaMA-Factory); we used the [official Qwen2_VL training script](https://github.com/hiyouga/LLaMA-Factory/blob/main/examples/train_full/qwen2vl_full_sft.yaml) for training.

<a name="evaluation"></a>
## 📊 Evaluation
We introduce two evaluation benchmarks: [**MMLongBench-Write**](https://huggingface.co/datasets/THU-KEG/MMLongBench-Write) and [**LongWrite-V-Ruler**](https://huggingface.co/datasets/THU-KEG/LongWrite-V-Ruler). **MMLongBench-Write** measures both the quality and the length of long outputs, while **LongWrite-V-Ruler** is a lightweight stress test of the model's maximum output length.

We provide our evaluation code under `eval/`. Run
```bash
python -m eval.mmlongbench_write --model {model_name} --method {vlm, caption_llm}
python -m eval.longwrite_v_ruler --model {model_name}
```
to get the evaluation results, replacing `{model_name}` with your model and choosing one of `vlm` or `caption_llm` for `--method`. Remember to configure your OpenAI API key in `config.py`, since we adopt GPT-4o as the judge.
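If you want to script both evaluations, a small wrapper along the following lines could work. It only assembles the two commands shown above; the helper names `eval_commands` and `run_all` are hypothetical, and the sketch assumes it is run from the repository root so that `python -m eval...` resolves.

```python
import sys

METHODS = ("vlm", "caption_llm")  # the two values listed for --method


def eval_commands(model_name: str, method: str = "vlm") -> list[list[str]]:
    """Return the argv lists for both benchmark runs."""
    if method not in METHODS:
        raise ValueError(f"method must be one of {METHODS}, got {method!r}")
    return [
        [sys.executable, "-m", "eval.mmlongbench_write",
         "--model", model_name, "--method", method],
        [sys.executable, "-m", "eval.longwrite_v_ruler",
         "--model", model_name],
    ]


def run_all(model_name: str, method: str = "vlm") -> None:
    """Run both evaluations in sequence, stopping at the first failure."""
    import subprocess

    for cmd in eval_commands(model_name, method):
        subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting issues when the model name contains special characters.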

| 50 |

+

|

| 51 |

+

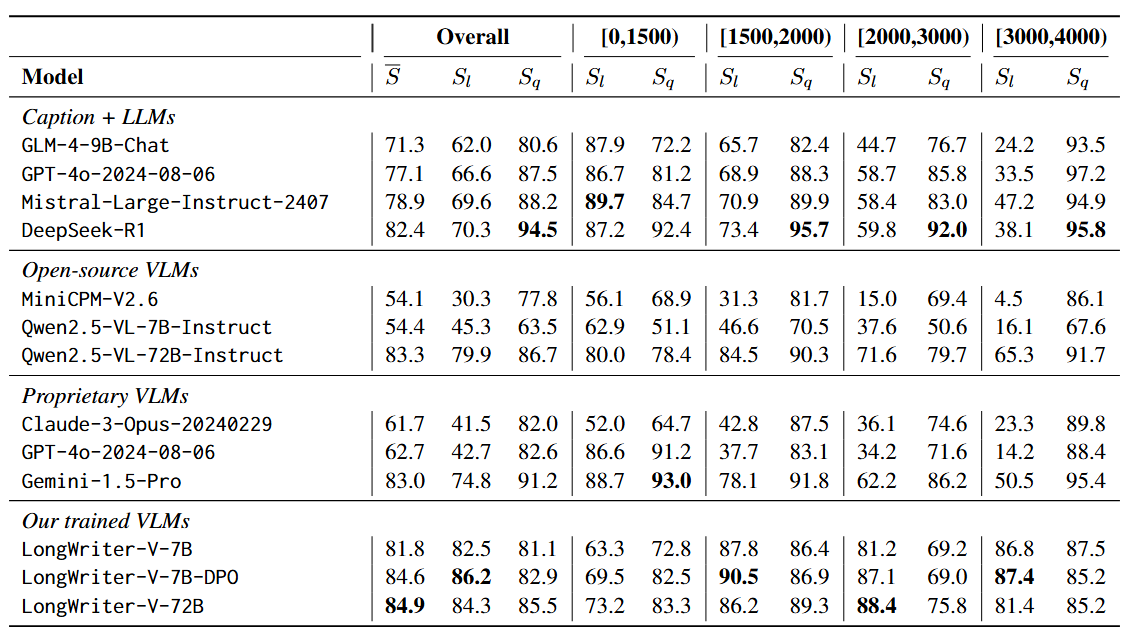

Here are the evaluation results on **MMLongBench-Write**:

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

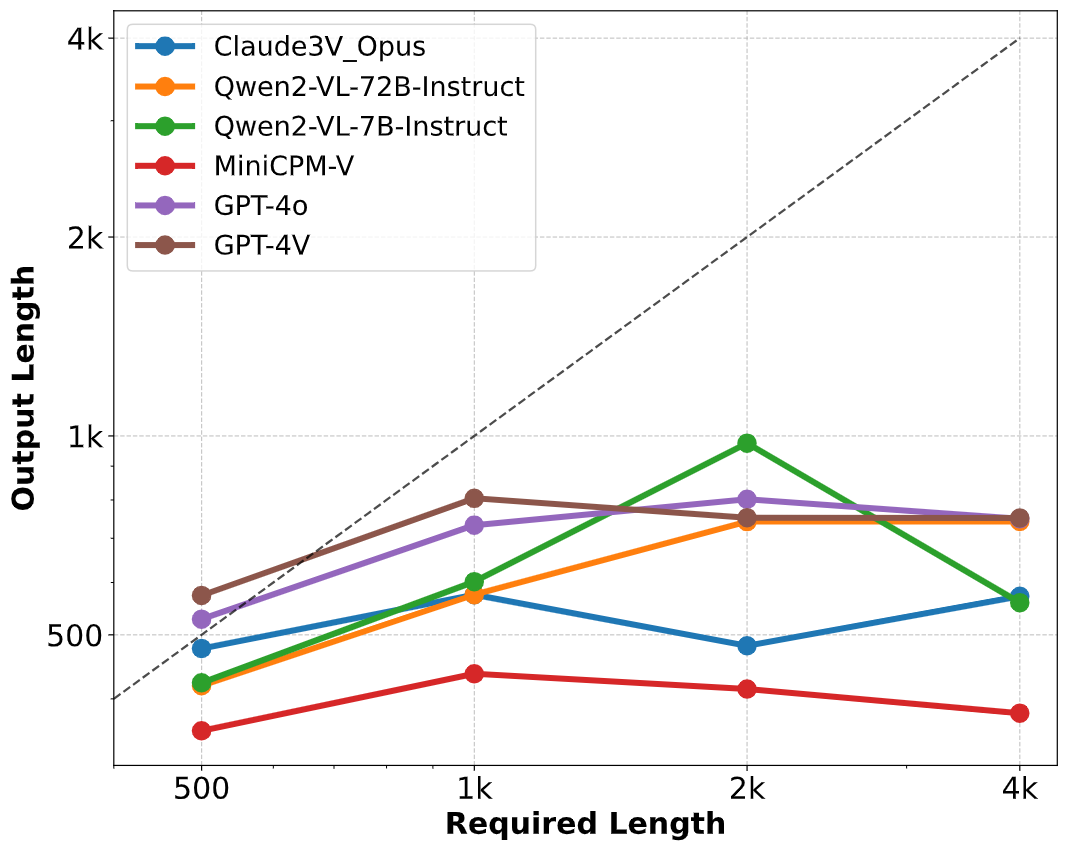

Here are the evaluation results on **LongWrite-V-Ruler**:

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

|

<a name="case"></a>
## 👀 Cases
Here are LongWriter-V-7B's outputs for random test prompts. (Examples truncated for brevity.)

<a name="citation"></a>
## 📝 Citation

If you find our work useful, please kindly cite:

```
@misc{tu2025longwriterv,
  title={LongWriter-V: Enabling Ultra-Long and High-Fidelity Generation in Vision-Language Models},
  author={Shangqing Tu and Yucheng Wang and Daniel Zhang-Li and Yushi Bai and Jifan Yu and Yuhao Wu and Lei Hou and Huiqin Liu and Zhiyuan Liu and Bin Xu and Juanzi Li},
  year={2025},
  eprint={2502.14834},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2502.14834},
}
```